Install and configure Snowflake JDBC driver

This operation should only be executed by your database administrator.

You can install a new JDBC driver by registering a data source and adding the JDBC driver in the procedure. Once you have installed the JDBC driver, you can use it to register other data sources of the same type.

Prerequisites

- You have downloaded the JDBC driver of your choice as an archive file (for example, ZIP or JAR).

Tip You can find a wide range of drivers on Collibra Marketplace.

- You have configured one or more Jobservers in Collibra Console. If there is no available Jobserver, the Register data source actions will be grayed out in the global create menu of Collibra Data Governance Center.

Steps

- In the main menu, click Catalog.

The Catalog Home opens.

- In the main menu, click the Create button.

The Create dialog box appears.

- In the Create dialog box, click Register data source (use a Collibra provided driver).

- If a JDBC driver is already installed for your data source:

- Enter the schema properties.

| Field | Description |

| Schema name | This name is used in Collibra DGC as schema asset and must therefore be unique. |

| Schema description | The description of the schema. This is used as description of the schema asset. |

| Data owner | The owner of the registered data in Collibra DGC. |

- Click Next.

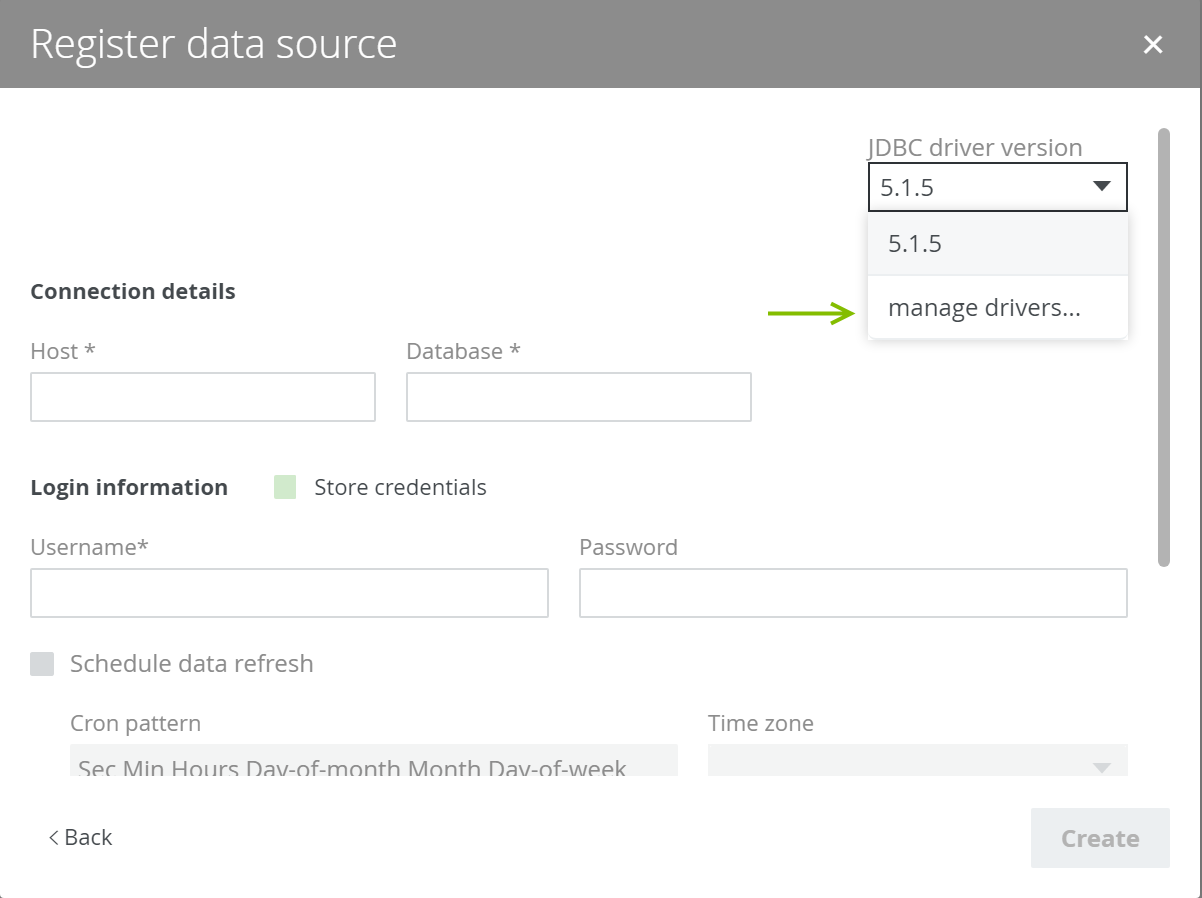

- In the JDBC driver version field, click manage drivers....


- Do one of the following:

- Click Add JDBC Driver if you want to create a new JDBC driver.

- Click the edit icon if you want to edit an existing JDBC driver.

- Enter the required information.

| Field | Description |

| JDBC Driver Version Name | The name of the JDBC driver. |

| Upload | Button to navigate to the driver file in JAR or ZIP format and upload it. |

| Driver files | This table contains a list of uploaded driver files in JAR or ZIP format. You can remove a driver file by clicking its remove icon. |

- Click Next.

- Configure the JDBC connection.

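| Field | Description |

| Connection | The JDBC connection string is: jdbc:snowflake: |

| Driver Class Name | Driver class name of the connection: cdata.jdbc.snowflake.SnowflakeDriver |

| Connection properties | The properties used to connect and authenticate to Snowflake, as described in the following sections. |

Connecting to Snowflake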

In addition to providing authentication (see below) set the following properties to connect to a Snowflake database:

- Url: Both AWS and Azure instances are supported. For example:

- AWS: https://myaccount.region.snowflakecomputing.com

- Azure: https://myaccount.region.azure.snowflakecomputing.com

Account is only required if your Url does not conform to the usual syntax containing the account name at the beginning. Snowflake provides the Account name needed in this case.

Optionally, you can set Database and Schema to restrict the tables and views returned by the driver.

Authenticating to Snowflake

The driver supports Snowflake user authentication, federated authentication, and SSL client authentication. To authenticate, set User and Password, and select the authentication method in the AuthScheme property.

Authenticating with Password

Set User and Password to a Snowflake user and set AuthScheme to PASSWORD.

Authenticating with Key Pair

The driver allows you to authenticate using key pair authentication by creating a secure token with the private key defined for your user account. To connect with this method, set AuthScheme to PRIVATEKEY and set the following values:

- User: The user account to authenticate as.

- PrivateKey: The private key used for the user, for example the path to the .pem file containing the private key.

- PrivateKeyType: The type of key store containing the private key, for example PEMKEY_FILE or PFXFILE.

- PrivateKeyPassword: The password for the specified private key.
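The key pair settings above can be collected in a java.util.Properties object, as in the following minimal sketch. The class name KeyPairProps and all sample values are hypothetical. It is also an assumption, not something this page confirms, that the resulting object can be passed to DriverManager.getConnection together with the jdbc:snowflake: prefix.

```java
import java.util.Properties;

// Hypothetical helper, not part of the driver: gathers the key pair
// authentication settings described above into one Properties object.
class KeyPairProps {

    static Properties build(String user, String keyPath, String keyType, String keyPassword) {
        Properties props = new Properties();
        props.setProperty("AuthScheme", "PRIVATEKEY");
        props.setProperty("User", user);
        props.setProperty("PrivateKey", keyPath);
        props.setProperty("PrivateKeyType", keyType);
        // Only set the password when the private key is actually protected.
        if (keyPassword != null && !keyPassword.isEmpty()) {
            props.setProperty("PrivateKeyPassword", keyPassword);
        }
        return props;
    }

    public static void main(String[] args) {
        // Sample values are placeholders.
        Properties props = build("my_user", "/path/to/rsa_key.pem", "PEMKEY_FILE", "");
        System.out.println(props.getProperty("AuthScheme"));
        // Assumed usage: DriverManager.getConnection("jdbc:snowflake:", props)
    }
}
```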

Authenticating with Okta

Set the AuthScheme to Okta. The following connection properties are used to connect to Okta:

- User: Set this to the Okta user.

- Password: Set this to the Okta password for the user.

- MFAPasscode (optional): Set this to the OTP code that was sent to your device. This property should be used only when the MFA is required for OKTA sign on.

The following SSOProperties are needed to authenticate to Okta:

- Domain: Set this to the OKTA org domain name.

- MFAType (optional): Set this to the multi-factor type. This property should be used only when the MFA is required for OKTA sign on. This property accepts one of the following values:

- OKTAVerify

- SMS

- APIToken (optional): Set this to the API Token that the customer created from the Okta org. It should be used when authenticating a user via a trusted application or proxy that overrides OKTA client request context.

The following is an example connection string:

AuthScheme=OKTA;User=username;Password=password;Url='https://myaccount.region.snowflakecomputing.com';Warehouse=My_warehouse;SSO Properties='Domain=https://cdata-okta.okta.com';

The following is an example connection string for OKTA MFA:

AuthScheme=OKTA;User=username;Password=password;MFAPasscode=8111461;Url='https://myaccount.region.snowflakecomputing.com';Warehouse=My_warehouse;SSO Properties='Domain=https://cdata-okta.okta.com;MFAType=OktaVerify;';
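The Okta connection strings above can also be assembled programmatically. The following sketch rebuilds the first example from its parts. The class and method names are hypothetical, and prepending the jdbc:snowflake: prefix to the property list is an assumption based on the Connection field described earlier. Values that contain semicolons or quotes are not escaped here.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: assembles an Okta connection string from its parts.
class OktaConnectionString {

    // Joins name=value pairs with ';' in insertion order.
    static String join(Map<String, String> props) {
        StringJoiner joiner = new StringJoiner(";");
        for (Map.Entry<String, String> e : props.entrySet()) {
            joiner.add(e.getKey() + "=" + e.getValue());
        }
        return joiner.toString();
    }

    static String build(String user, String password, String url,
                        String warehouse, String oktaDomain) {
        Map<String, String> sso = new LinkedHashMap<>();
        sso.put("Domain", oktaDomain);

        Map<String, String> props = new LinkedHashMap<>();
        props.put("AuthScheme", "OKTA");
        props.put("User", user);
        props.put("Password", password);
        props.put("Url", "'" + url + "'");
        props.put("Warehouse", warehouse);
        // The nested list is quoted so that its own separators are not
        // read as top-level separators.
        props.put("SSO Properties", "'" + join(sso) + "'");

        // Assumption: the property list directly follows the jdbc:snowflake: prefix.
        return "jdbc:snowflake:" + join(props) + ";";
    }

    public static void main(String[] args) {
        System.out.println(build("username", "password",
                "https://myaccount.region.snowflakecomputing.com",
                "My_warehouse", "https://cdata-okta.okta.com"));
    }
}
```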

Authenticating with AzureAD

Set the AuthScheme to AzureAD. The following connection properties are used to connect to AzureAD:

- User: Set this to your AD user. When connecting, your browser will open allowing you to login to Azure AD to complete the authentication.

The following is an example connection string for AzureAD:

AuthScheme=AzureAD;Url=https://myaccount.region.snowflakecomputing.com;User=user@domain.onmicrosoft.com;

Authenticating with PingFederate

Set the AuthScheme to PingFederate. The following connection properties are used to connect to PingFederate:

- User: Set this to your PingFederate user. The user should have been added to the PingFederate Data Stores. When connecting, your browser opens so that you can log in to PingFederate to complete the authentication.

The following is an example connection string for PingFederate (assuming that Active Directory is used as a Data Store):

AuthScheme=PingFederate;Url=https://myaccount.region.snowflakecomputing.com;User=myuser@mydomain;Account=myaccount;Warehouse=mywarehouse;

Authenticating with OAuth

To authenticate with OAuth, set the AuthScheme to OAuth. You can authenticate by Creating a Custom OAuth App to obtain the OAuthClientId, OAuthClientSecret, and CallbackURL connection properties.

See Using OAuth Authentication for an authentication guide.

Configuring Access Control

If the authenticating user maps to a system-defined role, specify it in the RoleName property.

For a complete overview of the available connection properties, see Connection properties under Advanced settings.


- Click Create.

- In the Register data source dialog box, enter the schema properties.

| Field | Description |

| Schema name | This name is used in Collibra DGC as Schema asset and must therefore be unique. |

| Schema description | The description of the schema. This is used as description of the Schema asset. |

| Data owner | The owner of the registered data in Collibra DGC. |

- Click Next.

- Enter the database connection properties.

| Option | Description |

| JDBC driver version | The JDBC driver to connect to your database. |

| Connect via | The Jobserver used for ingesting. |

| URL | Address of the used database. Use the format hostname:port. |

| ... <The connection properties that are defined in JDBC driver> ... | |

| Store credentials | Select this option to store the credentials to access the database. With a schema refresh, you can clear this option again. |

| Username | Username to access the database. |

| Password | Corresponding password to access the database. |

| Schedule data refresh | Enable or disable a schedule to automatically refresh the data registration. |

| Cron pattern | Schedule of the data refresh as a Cron pattern. If you create an invalid Cron pattern, Collibra Data Governance Center stops responding. |

| Time zone | The time zone of the database. |

Note If Collibra DGC cannot connect to the database, you cannot continue the data source registration wizard.

Depending on the selected database, some of the options are not available.

- Click Next.

- Select the data profiling options.

| Option | Description |

| Store Data Profile | Option to perform data profiling on the registered data. |

| Detect advanced data types | Option to detect advanced data types in the data source. |

| Store Sample Data | Option to extract sample data from the registered data. |

- Click Create.

Advanced settings

Connection properties

The connection properties are the various options that can be used to establish a connection. This section provides a complete list of the options you can use. Click the links for further details.

| Property | Description |

| AuthScheme | The authentication scheme used. Accepted entries are Password, OKTA, PrivateKey, AzureAD, OAuth, or PingFederate. |

| Account | The Account provided for authentication with Snowflake database. This is usually derived from the URL automatically. |

| Warehouse | The name of the Snowflake warehouse. |

| User | The username provided for authentication with the Snowflake database. |

| Password | The user's password. |

| URL | The URL of Snowflake database. |

| MFAPasscode | Specifies the passcode to use for multi-factor authentication. |

| RoleName | The role of the Snowflake user: PUBLIC, SYSADMIN, or ACCOUNTADMIN. |

| CredentialsLocation | The location of the settings file where credentials are saved. |

AuthScheme

The authentication scheme used. Accepted entries are Password, OKTA, PrivateKey, AzureAD, OAuth, or PingFederate.

Possible Values

Password, OKTA, PrivateKey, AzureAD, OAuth, PingFederate

Data Type

string

Default Value

"Password"

Remarks

The driver supports the following authentication mechanisms. See the Getting Started chapter for authentication guides.

- Password: Set this to authenticate with a Snowflake user.

- OKTA: Set this to use the OKTA SSO identity provider. Set SSOProperties in addition to the User and Password you use to authenticate to OKTA.

- AzureAD: Set this along with User to use the Azure Active Directory identity provider. When connecting, your browser will open allowing you to login to Azure AD to complete the authentication.

- PingFederate: Set this along with User to use the PingFederate SSO identity provider. When connecting, your browser will open allowing you to login to PingFederate to complete the authentication.

- PrivateKey: Set this to use key pair authentication. In addition, set PrivateKey, PrivateKeyType, and PrivateKeyPassword.

- OAuth: Set this to use OAuth authentication. Set OAuthClientId and OAuthClientSecret to the Snowflake OAuth credentials. Additionally, set InitiateOAuth to GETANDREFRESH. Note that the CData driver always uses PKCE with OAuth for extra security.

Account

The Account provided for authentication with Snowflake database. This is usually derived from the URL automatically.

Data Type

string

Default Value

""

Remarks

The Account provided for authentication with Snowflake database. This is usually derived from the URL automatically and will not need to be set manually. A notable exception is Snowflake VPS if your Account name doesn't follow the usual URL syntax https://myaccount.region.snowflakecomputing.com. Snowflake provides the Account name in this case.

Warehouse

The name of the Snowflake warehouse.

Data Type

string

Default Value

""

Remarks

The name of the Snowflake warehouse.

User

The username provided for authentication with the Snowflake database.

Data Type

string

Default Value

""

Remarks

The username provided for authentication with the Snowflake database.

Password

The user's password.

Data Type

string

Default Value

""

Remarks

The password provided for authentication with Snowflake.

URL

The URL of Snowflake database.

Data Type

string

Default Value

""

Remarks

Set this property to the URL of the Snowflake database instance.

AWS Format:

https://myaccount.region.snowflakecomputing.com

Azure Format:

https://myaccount.region.azure.snowflakecomputing.com

MFAPasscode

Specifies the passcode to use for multi-factor authentication.

Data Type

string

Default Value

""

Remarks

Specifies the passcode to use for multi-factor authentication.

RoleName

The role of the Snowflake user: PUBLIC, SYSADMIN, or ACCOUNTADMIN.

Data Type

string

Default Value

""

Remarks

The role of the Snowflake user using the specified database. The defaults in Snowflake are: PUBLIC, SYSADMIN, or ACCOUNTADMIN. A custom role may also be specified.

CredentialsLocation

The location of the settings file where credentials are saved.

Data Type

string

Default Value

"%APPDATA%\CData\Snowflake Data Provider\CredentialsFile.txt"

Remarks

If left unspecified, the default location is "%APPDATA%\CData\Snowflake Data Provider\CredentialsFile.txt" with %APPDATA% being set to the user's configuration directory:

| Platform | %APPDATA% |

| Windows | The value of the APPDATA environment variable |

| Mac | ~/Library/Application Support |

| Linux | ~/.config |
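The platform table above can be read as a small lookup. The following sketch resolves the default credentials file location for a given platform. The class name is hypothetical, and joining the path with forward slashes on Mac and Linux is an assumption about how the driver expands the default value.

```java
import java.util.Locale;

// Hypothetical helper: expands the documented default CredentialsLocation
// for a given platform, following the table above.
class DefaultCredentialsLocation {

    // osName as in System.getProperty("os.name"), appDataEnv as in
    // System.getenv("APPDATA"), userHome as in System.getProperty("user.home").
    static String resolve(String osName, String appDataEnv, String userHome) {
        String os = osName.toLowerCase(Locale.ROOT);
        String base;
        String sep = "/";
        if (os.contains("mac")) {
            base = userHome + "/Library/Application Support";
        } else if (os.contains("win")) {
            base = appDataEnv;
            sep = "\\";
        } else {
            // Treated as Linux: ~/.config
            base = userHome + "/.config";
        }
        return String.join(sep, base, "CData", "Snowflake Data Provider", "CredentialsFile.txt");
    }

    public static void main(String[] args) {
        System.out.println(resolve(
                System.getProperty("os.name"),
                System.getenv("APPDATA"),
                System.getProperty("user.home")));
    }
}
```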

UseVirtualHosting

If true (default), buckets are referenced in the request using the hosted-style request: http://yourbucket.s3.amazonaws.com/yourobject. If set to false, the driver uses the path-style request: http://s3.amazonaws.com/yourbucket/yourobject. Note that this property is set to false for an S3-based custom service when CustomURL is specified.

Data Type

bool

Default Value

true

Remarks

If true (default), buckets are referenced in the request using the hosted-style request: http://yourbucket.s3.amazonaws.com/yourobject. If set to false, the driver uses the path-style request: http://s3.amazonaws.com/yourbucket/yourobject. Note that this property is set to false for an S3-based custom service when CustomURL is specified.
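To make the difference between the two request styles concrete, the following sketch builds both URL shapes from a bucket and object name. The class and method are purely illustrative and not part of the driver.

```java
// Illustrative only: shows the two URL shapes selected by UseVirtualHosting.
class S3RequestStyle {

    static String objectUrl(String bucket, String object, boolean useVirtualHosting) {
        if (useVirtualHosting) {
            // Hosted-style: the bucket is part of the host name.
            return "http://" + bucket + ".s3.amazonaws.com/" + object;
        }
        // Path-style: the bucket is the first path segment.
        return "http://s3.amazonaws.com/" + bucket + "/" + object;
    }

    public static void main(String[] args) {
        System.out.println(objectUrl("yourbucket", "yourobject", true));
        System.out.println(objectUrl("yourbucket", "yourobject", false));
    }
}
```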

AzureTenant

The Microsoft Online tenant being used to access data. If not specified, your default tenant will be used.

Data Type

string

Default Value

""

Remarks

The Microsoft Online tenant being used to access data. For instance, contoso.onmicrosoft.com. Alternatively, specify the tenant id. This value is the directory Id in the Azure Portal > Azure Active Directory > Properties.

Typically it is not necessary to specify the tenant. Microsoft can determine it automatically when OAuthGrantType is set to CODE (default). However, this may fail if the user belongs to multiple tenants, for instance when an admin of domain A invites a user of domain B as a guest user, so that the user belongs to both tenants. It is good practice to specify the tenant, although things normally work without it.

The AzureTenant is required when setting OAuthGrantType to CLIENT. When using client credentials, there is no user context. The credentials are taken from the context of the app itself. While Microsoft still allows client credentials to be obtained without specifying which Tenant, it has a much lower probability of picking the specific tenant you want to work with. For this reason, we require AzureTenant to be explicitly stated for all client credentials connections to ensure you get credentials that are applicable for the domain you intend to connect to.

ProofKey

The ProofKey for authentication with Snowflake database. This is usually derived from GetSSOAuthorizationURL call.

Data Type

string

Default Value

""

Remarks

ExternalToken

The External Token for authentication with the Snowflake database. This is usually derived from the external handler. For example, handling the callback URL from the GetSSOAuthorizationURL procedure returns this token.

Data Type

string

Default Value

""

Remarks

SSOProperties

Additional properties required to connect to the identity provider in a semicolon-separated list.

Data Type

string

Default Value

""

Remarks

Additional properties required to connect to the identity provider in a semicolon-separated list. The following sections provide examples using the Okta provider.

OKTA

- Domain is the Okta domain you are signing in with, for example: myorg.okta.com.

- APIToken is your Okta API token. In most cases it is unnecessary but can be provided if needed.
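As an illustration of the semicolon-separated format, the following sketch splits an SSOProperties value into name and value pairs. The parser is hypothetical and only mirrors the format described above. It does not handle quoted values or values that themselves contain semicolons.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical parser for a semicolon-separated name=value list.
class SsoPropertiesParser {

    static Map<String, String> parse(String raw) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String part : raw.split(";")) {
            String entry = part.trim();
            if (entry.isEmpty()) {
                continue; // tolerate a trailing or doubled semicolon
            }
            // Split on the first '=' only, so values such as URLs stay intact.
            int eq = entry.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Expected name=value but got: " + entry);
            }
            result.put(entry.substring(0, eq).trim(), entry.substring(eq + 1).trim());
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(parse("Domain=https://cdata-okta.okta.com;MFAType=OktaVerify;"));
    }
}
```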

PrivateKey

The private key provided for key pair authentication with Snowflake.

Data Type

string

Default Value

""

Remarks

The path to the file containing the private key or the name of the certificate store for the client certificate. The PrivateKeyType field specifies the type of the certificate store specified by PrivateKey. If the store is password protected, specify the password in PrivateKeyPassword.

When the certificate store type is PEMKEY_FILE, PFXFILE, etc., this property must be set to the path to the file. When the type is PEMKEY_BLOB, PFXBLOB, etc., the property must be set to the binary contents of the file.

Designations of certificate stores are platform-dependent.

The following are designations of the most common User and Machine certificate stores in Windows:

| MY | A certificate store holding personal certificates with their associated private keys. |

| CA | Certifying authority certificates. |

| ROOT | Root certificates. |

| SPC | Software publisher certificates. |

In Java, the certificate store normally is a file containing certificates and optional private keys.

PrivateKeyPassword

The password for the private key specified in the PrivateKey property, if required.

Data Type

string

Default Value

""

Remarks

The password for the private key specified in the PrivateKey property, if required.

PrivateKeyType

The type of key store containing the private key to use with key pair authentication.

Possible Values

USER, MACHINE, PFXFILE, PFXBLOB, JKSFILE, JKSBLOB, PEMKEY_FILE, PEMKEY_BLOB, PUBLIC_KEY_FILE, PUBLIC_KEY_BLOB, SSHPUBLIC_KEY_FILE, SSHPUBLIC_KEY_BLOB, P7BFILE, PPKFILE, XMLFILE, XMLBLOB

Data Type

string

Default Value

"USER"

Remarks

This property can take one of the following values:

| USER - default | For Windows, this specifies that the certificate store is a certificate store owned by the current user. Note that this store type is not available in Java. |

| MACHINE | For Windows, this specifies that the certificate store is a machine store. Note that this store type is not available in Java. |

| PFXFILE | The certificate store is the name of a PFX (PKCS12) file containing certificates. |

| PFXBLOB | The certificate store is a string (base-64-encoded) representing a certificate store in PFX (PKCS12) format. |

| JKSFILE | The certificate store is the name of a Java key store (JKS) file containing certificates. Note that this store type is only available in Java. |

| JKSBLOB | The certificate store is a string (base-64-encoded) representing a certificate store in JKS format. Note that this store type is only available in Java. |

| PEMKEY_FILE | The certificate store is the name of a PEM-encoded file that contains a private key and an optional certificate. |

| PEMKEY_BLOB | The certificate store is a string (base-64-encoded) that contains a private key and an optional certificate. |

| PUBLIC_KEY_FILE | The certificate store is the name of a file that contains a PEM- or DER-encoded public key certificate. |

| PUBLIC_KEY_BLOB | The certificate store is a string (base-64-encoded) that contains a PEM- or DER-encoded public key certificate. |

| SSHPUBLIC_KEY_FILE | The certificate store is the name of a file that contains an SSH-style public key. |

| SSHPUBLIC_KEY_BLOB | The certificate store is a string (base-64-encoded) that contains an SSH-style public key. |

| P7BFILE | The certificate store is the name of a PKCS7 file containing certificates. |

| PPKFILE | The certificate store is the name of a file that contains a PuTTY Private Key (PPK). |

| XMLFILE | The certificate store is the name of a file that contains a certificate in XML format. |

| XMLBLOB | The certificate store is a string that contains a certificate in XML format. |

InitiateOAuth

Set this property to initiate the process to obtain or refresh the OAuth access token when you connect.

Possible Values

OFF, GETANDREFRESH, REFRESH

Data Type

string

Default Value

"OFF"

Remarks

The following options are available:

- OFF: Indicates that the OAuth flow will be handled entirely by the user. An OAuthAccessToken will be required to authenticate.

- GETANDREFRESH: Indicates that the entire OAuth Flow will be handled by the driver. If no token currently exists, it will be obtained by prompting the user via the browser. If a token exists, it will be refreshed when applicable.

- REFRESH: Indicates that the driver will only handle refreshing the OAuthAccessToken. The user will never be prompted by the driver to authenticate via the browser. The user must handle obtaining the OAuthAccessToken and OAuthRefreshToken initially.

OAuthClientId

The client ID assigned when you register your application with an OAuth authorization server.

Data Type

string

Default Value

""

Remarks

As part of registering an OAuth application, you will receive the OAuthClientId value, sometimes also called a consumer key, and a client secret, the OAuthClientSecret.

OAuthClientSecret

The client secret assigned when you register your application with an OAuth authorization server.

Data Type

string

Default Value

""

Remarks

As part of registering an OAuth application, you will receive the OAuthClientId, also called a consumer key. You will also receive a client secret, also called a consumer secret. Set the client secret in the OAuthClientSecret property.

OAuthAccessToken

The access token for connecting using OAuth.

Data Type

string

Default Value

""

Remarks

The OAuthAccessToken property is used to connect using OAuth. The OAuthAccessToken is retrieved from the OAuth server as part of the authentication process. It has a server-dependent timeout and can be reused between requests.

The access token is used in place of your user name and password. The access token protects your credentials by keeping them on the server.

CallbackURL

The OAuth callback URL to return to when authenticating. This value must match the callback URL you specify in your Add-In settings.

Data Type

string

Default Value

""

Remarks

During the authentication process, the OAuth authorization server redirects the user to this URL. This value must match the callback URL you specify in your Add-In settings.

State

An optional value that has meaning for your OAuth App.

Data Type

string

Default Value

""

Remarks

Used in OAuth authentication: This is an optional value that has meaning for your OAuth App.

OAuthSettingsLocation

The location of the settings file where OAuth values are saved when InitiateOAuth is set to GETANDREFRESH or REFRESH. Alternatively, this can be held in memory by specifying a value starting with memory://.

Data Type

string

Default Value

"%APPDATA%\CData\Snowflake Data Provider\OAuthSettings.txt"

Remarks

When InitiateOAuth is set to GETANDREFRESH or REFRESH, the driver saves OAuth values to avoid requiring the user to manually enter OAuth connection properties and allowing the credentials to be shared across connections or processes.

Alternatively to specifying a file path, memory storage can be used instead. Memory locations are specified by using a value starting with 'memory://' followed by a unique identifier for that set of credentials (ex: memory://user1). The identifier can be anything you choose but should be unique to the user. Unlike with the file based storage, you must manually store the credentials when closing the connection with memory storage to be able to set them in the connection when the process is started again. The OAuth property values can be retrieved with a query to the sys_connection_props system table. If there are multiple connections using the same credentials, the properties should be read from the last connection to be closed.

If left unspecified, the default location is "%APPDATA%\CData\Snowflake Data Provider\OAuthSettings.txt" with %APPDATA% being set to the user's configuration directory:

| Platform | %APPDATA% |

| Windows | The value of the APPDATA environment variable |

| Mac | ~/Library/Application Support |

| Linux | ~/.config |

OAuthAuthenticator

This determines the authenticator that the OAuth application requests from Snowflake.

Possible Values

None, Azure, OKTA

Data Type

string

Default Value

"None"

Remarks

This determines the authenticator that the OAuth application requests from Snowflake.

Scope

This determines the scopes that the OAuth application requests from Snowflake.

Data Type

string

Default Value

""

Remarks

Specify scope to obtain the initial access and refresh token.

By default the driver will request that the user authorize all available scopes. If you want to override this, you can set this property to a space-separated list of OAuth scopes.

OAuthAuthorizationURL

The authorization URL for the OAuth service.

Data Type

string

Default Value

""

Remarks

The authorization URL for the OAuth service. At this URL, the user logs into the server and grants permissions to the application. In OAuth 1.0, if permissions are granted, the request token is authorized.

OAuthAccessTokenURL

The URL to retrieve the OAuth access token from.

Data Type

string

Default Value

""

Remarks

The URL to retrieve the OAuth access token from. In OAuth 1.0, the authorized request token is exchanged for the access token at this URL.

OAuthVerifier

The verifier code returned from the OAuth authorization URL.

Data Type

string

Default Value

""

Remarks

The verifier code returned from the OAuth authorization URL. This can be used on systems where a browser cannot be launched such as headless systems.

Authentication on Headless Machines

See Establishing a Connection to obtain the OAuthVerifier value.

Set OAuthSettingsLocation along with OAuthVerifier. When you connect, the driver exchanges the OAuthVerifier for the OAuth authentication tokens and saves them, encrypted, to the specified file. Set InitiateOAuth to GETANDREFRESH to automate the exchange.

Once the OAuth settings file has been generated, you can remove OAuthVerifier from the connection properties and connect with OAuthSettingsLocation set.

To automatically refresh the OAuth token values, set OAuthSettingsLocation and additionally set InitiateOAuth to REFRESH.

PKCEVerifier

A random value used as input for calling GetOAuthAccessToken in the PKCE flow.

Data Type

string

Default Value

""

Remarks

This is usually derived from GetOAuthAuthorizationUrl call.

OAuthRefreshToken

The OAuth refresh token for the corresponding OAuth access token.

Data Type

string

Default Value

""

Remarks

The OAuthRefreshToken property is used to refresh the OAuthAccessToken when using OAuth authentication.

OAuthExpiresIn

The lifetime in seconds of the OAuth AccessToken.

Data Type

string

Default Value

""

Remarks

Pair with OAuthTokenTimestamp to determine when the AccessToken will expire.

OAuthTokenTimestamp

The Unix epoch timestamp in milliseconds when the current Access Token was created.

Data Type

string

Default Value

""

Remarks

Pair with OAuthExpiresIn to determine when the AccessToken will expire.
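The pairing of OAuthTokenTimestamp and OAuthExpiresIn comes down to simple arithmetic: the creation time in milliseconds plus the lifetime in seconds. The sketch below shows that calculation. The class name is hypothetical, and both values are parsed from strings because the properties are documented with the string data type.

```java
import java.time.Instant;

// Hypothetical helper: derives the expiry instant of an access token from
// OAuthTokenTimestamp (epoch milliseconds) and OAuthExpiresIn (seconds).
class OAuthTokenExpiry {

    static Instant expiresAt(String oauthTokenTimestamp, String oauthExpiresIn) {
        long createdMillis = Long.parseLong(oauthTokenTimestamp.trim());
        long lifetimeSeconds = Long.parseLong(oauthExpiresIn.trim());
        return Instant.ofEpochMilli(createdMillis).plusSeconds(lifetimeSeconds);
    }

    // A token counts as expired once the current time reaches the expiry instant.
    static boolean isExpired(String oauthTokenTimestamp, String oauthExpiresIn, Instant now) {
        return !now.isBefore(expiresAt(oauthTokenTimestamp, oauthExpiresIn));
    }

    public static void main(String[] args) {
        // Sample values are placeholders.
        System.out.println(expiresAt("1700000000000", "3600"));
        System.out.println(isExpired("1700000000000", "3600", Instant.now()));
    }
}
```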

SSLServerCert

The certificate to be accepted from the server when connecting using TLS/SSL.

Data Type

string

Default Value

""

Remarks

If using a TLS/SSL connection, this property can be used to specify the TLS/SSL certificate to be accepted from the server. Any other certificate that is not trusted by the machine is rejected.

This property can take the following forms:

| Description | Example |

| A full PEM Certificate (example shortened for brevity) | -----BEGIN CERTIFICATE----- MIIChTCCAe4CAQAwDQYJKoZIhv......Qw== -----END CERTIFICATE----- |

| A path to a local file containing the certificate | C:\cert.cer |

| The public key (example shortened for brevity) | -----BEGIN RSA PUBLIC KEY----- MIGfMA0GCSq......AQAB -----END RSA PUBLIC KEY----- |

| The MD5 Thumbprint (hex values can also be either space or colon separated) | ecadbdda5a1529c58a1e9e09828d70e4 |

| The SHA1 Thumbprint (hex values can also be either space or colon separated) | 34a929226ae0819f2ec14b4a3d904f801cbb150d |

If not specified, any certificate trusted by the machine is accepted.

Certificates are validated as trusted by the machine based on the system's trust store. The trust store used is the 'javax.net.ssl.trustStore' value specified for the system. If no value is specified for this property, Java's default trust store is used (for example, JAVA_HOME\lib\security\cacerts).

Use '*' to accept all certificates. Note that this is not recommended due to security concerns.
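Because thumbprints may be written with space or colon separators, it can help to bring them into one canonical form before comparing or storing them. The following sketch does this. The helper is hypothetical, and the length check relies on MD5 digests being 16 bytes (32 hex characters) and SHA1 digests being 20 bytes (40 hex characters).

```java
import java.util.Locale;

// Hypothetical helper: normalizes an MD5 or SHA1 thumbprint that may be
// written with space or colon separators into plain lowercase hex.
class ThumbprintFormat {

    static String normalize(String thumbprint) {
        String hex = thumbprint.replaceAll("[\\s:]", "").toLowerCase(Locale.ROOT);
        if (!hex.matches("[0-9a-f]+")) {
            throw new IllegalArgumentException("Thumbprint contains non-hex characters");
        }
        // MD5 is 16 bytes (32 hex characters), SHA1 is 20 bytes (40 hex characters).
        if (hex.length() != 32 && hex.length() != 40) {
            throw new IllegalArgumentException("Unexpected thumbprint length: " + hex.length());
        }
        return hex;
    }

    public static void main(String[] args) {
        System.out.println(normalize("34:A9:29:22:6A:E0:81:9F:2E:C1:4B:4A:3D:90:4F:80:1C:BB:15:0D"));
    }
}
```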

FirewallType

The protocol used by a proxy-based firewall.

Possible Values

NONE, TUNNEL, SOCKS4, SOCKS5

Data Type

string

Default Value

"NONE"

Remarks

This property specifies the protocol that the driver will use to tunnel traffic through the FirewallServer proxy. Note that by default, the driver connects to the system proxy; to disable this behavior and connect to one of the following proxy types, set ProxyAutoDetect to false.

| Type | Default Port | Description |

| TUNNEL | 80 | When this is set, the driver opens a connection to Snowflake and traffic flows back and forth through the proxy. |

| SOCKS4 | 1080 | When this is set, the driver sends data through the SOCKS 4 proxy specified by FirewallServer and FirewallPort and passes the FirewallUser value to the proxy, which determines if the connection request should be granted. |

| SOCKS5 | 1080 | When this is set, the driver sends data through the SOCKS 5 proxy specified by FirewallServer and FirewallPort. If your proxy requires authentication, set FirewallUser and FirewallPassword to credentials the proxy recognizes. |

To connect to HTTP proxies, use ProxyServer and ProxyPort. To authenticate to HTTP proxies, use ProxyAuthScheme, ProxyUser, and ProxyPassword.

FirewallServer

The name or IP address of a proxy-based firewall.

Data Type

string

Default Value

""

Remarks

This property specifies the IP address, DNS name, or host name of a proxy allowing traversal of a firewall. The protocol is specified by FirewallType: Use FirewallServer with this property to connect through SOCKS or do tunneling. Use ProxyServer to connect to an HTTP proxy.

Note that the driver uses the system proxy by default. To use a different proxy, set ProxyAutoDetect to false.

FirewallPort

The TCP port for a proxy-based firewall.

Data Type

int

Default Value

0

Remarks

This specifies the TCP port for a proxy allowing traversal of a firewall. Use FirewallServer to specify the name or IP address. Specify the protocol with FirewallType.

FirewallUser

The user name to use to authenticate with a proxy-based firewall.

Data Type

string

Default Value

""

Remarks

The FirewallUser and FirewallPassword properties are used to authenticate against the proxy specified in FirewallServer and FirewallPort, following the authentication method specified in FirewallType.

FirewallPassword

A password used to authenticate to a proxy-based firewall.

Data Type

string

Default Value

""

Remarks

This property is passed to the proxy specified by FirewallServer and FirewallPort, following the authentication method specified by FirewallType.

ProxyAutoDetect

This indicates whether to use the system proxy settings or not. This takes precedence over other proxy settings, so you'll need to set ProxyAutoDetect to FALSE in order to use custom proxy settings.

Data Type

bool

Default Value

false

Remarks

This takes precedence over other proxy settings, so you'll need to set ProxyAutoDetect to FALSE in order to use custom proxy settings.

NOTE: When this property is set to True, the proxy used is determined as follows:

- A search from the JVM properties (http.proxy, https.proxy, socksProxy, etc.) is performed.

- In the case that the JVM properties don't exist, a search from java.home/lib/net.properties is performed.

- In the case that java.net.useSystemProxies is set to True, a search from the SystemProxy is performed.

- In Windows only, an attempt is made to retrieve these properties from the Internet Options in the registry.

To connect to an HTTP proxy, see ProxyServer. For other proxies, such as SOCKS or tunneling, see FirewallType.

ProxyServer

The hostname or IP address of a proxy to route HTTP traffic through.

Data Type

string

Default Value

""

Remarks

The hostname or IP address of a proxy to route HTTP traffic through. The driver can use the HTTP, Windows (NTLM), or Kerberos authentication types to authenticate to an HTTP proxy.

If you need to connect through a SOCKS proxy or tunnel the connection, see FirewallType.

By default, the driver uses the system proxy. If you need to use another proxy, set ProxyAutoDetect to false.

ProxyPort

The TCP port the ProxyServer proxy is running on.

Data Type

int

Default Value

80

Remarks

The port the HTTP proxy is running on that you want to redirect HTTP traffic through. Specify the HTTP proxy in ProxyServer. For other proxy types, see FirewallType.

ProxyAuthScheme

The authentication type to use to authenticate to the ProxyServer proxy.

Possible Values

BASIC, DIGEST, NONE, NEGOTIATE, NTLM, PROPRIETARY

Data Type

string

Default Value

"BASIC"

Remarks

This value specifies the authentication type to use to authenticate to the HTTP proxy specified by ProxyServer and ProxyPort.

Note that the driver will use the system proxy settings by default, without further configuration needed; if you want to connect to another proxy, you will need to set ProxyAutoDetect to false, in addition to ProxyServer and ProxyPort. To authenticate, set ProxyAuthScheme and set ProxyUser and ProxyPassword, if needed.

The authentication type can be one of the following:

- BASIC: The driver performs HTTP BASIC authentication.

- DIGEST: The driver performs HTTP DIGEST authentication.

- NEGOTIATE: The driver retrieves an NTLM or Kerberos token based on the applicable protocol for authentication.

- PROPRIETARY: The driver does not generate an NTLM or Kerberos token. You must supply this token in the Authorization header of the HTTP request.

If you need to use another authentication type, such as SOCKS 5 authentication, see FirewallType.

ProxyUser

A user name to be used to authenticate to the ProxyServer proxy.

Data Type

string

Default Value

""

Remarks

The ProxyUser and ProxyPassword options are used to connect and authenticate against the HTTP proxy specified in ProxyServer.

You can select one of the available authentication types in ProxyAuthScheme. If you are using HTTP authentication, set this to the user name of a user recognized by the HTTP proxy. If you are using Windows or Kerberos authentication, set this property to a user name in one of the following formats:

user@domain

domain\user

ProxyPassword

A password to be used to authenticate to the ProxyServer proxy.

Data Type

string

Default Value

""

Remarks

This property is used to authenticate to an HTTP proxy server that supports NTLM (Windows), Kerberos, or HTTP authentication. To specify the HTTP proxy, you can set ProxyServer and ProxyPort. To specify the authentication type, set ProxyAuthScheme.

If you are using HTTP authentication, additionally set ProxyUser and ProxyPassword to the credentials of a user recognized by the HTTP proxy.

If you are using NTLM authentication, set ProxyUser and ProxyPassword to your Windows user name and password. You may also need these to complete Kerberos authentication.

For SOCKS 5 authentication or tunneling, see FirewallType.

By default, the driver uses the system proxy. If you want to connect to another proxy, set ProxyAutoDetect to false.
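
The HTTP proxy properties described above can be combined as in the following Java sketch, which only assembles a connection string. The class name, host, port, and credentials are hypothetical, and the sketch assumes the semicolon-separated `name=value` URL syntax shown in the JDBC URL examples in this document.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class HttpProxyUrlSketch {
    // Collects the HTTP proxy properties in a fixed order and joins them into a URL.
    static String build(String host, int port, String authScheme, String user, String password) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("ProxyAutoDetect", "false"); // use the explicit settings below
        props.put("ProxyServer", host);
        props.put("ProxyPort", Integer.toString(port));
        props.put("ProxyAuthScheme", authScheme);
        props.put("ProxyUser", user);
        props.put("ProxyPassword", password);

        StringBuilder url = new StringBuilder("jdbc:snowflake:");
        for (Map.Entry<String, String> entry : props.entrySet()) {
            url.append(entry.getKey()).append('=').append(entry.getValue()).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        System.out.println(build("proxy.example.com", 8080, "BASIC", "proxyUser", "proxyPass"));
    }
}
```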

ProxySSLType

The SSL type to use when connecting to the ProxyServer proxy.

Possible Values

AUTO, ALWAYS, NEVER, TUNNEL

Data Type

string

Default Value

"AUTO"

Remarks

This property determines when to use SSL for the connection to an HTTP proxy specified by ProxyServer. This value can be AUTO, ALWAYS, NEVER, or TUNNEL. The applicable values are the following:

| AUTO | Default setting. If the URL is an HTTPS URL, the driver will use the TUNNEL option. If the URL is an HTTP URL, the driver will use the NEVER option. |

| ALWAYS | The connection is always SSL enabled. |

| NEVER | The connection is not SSL enabled. |

| TUNNEL | The connection is through a tunneling proxy. The proxy server opens a connection to the remote host and traffic flows back and forth through the proxy. |
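
The AUTO rule in the table can be expressed as a small function. The following Java sketch is an illustration of that rule only, not the driver's code; the class and method names are hypothetical.

```java
import java.net.URI;

final class ProxySslTypeSketch {
    // Maps the AUTO setting to the option described for each URL scheme.
    static String resolveAuto(String url) {
        String scheme = URI.create(url).getScheme();
        if ("https".equalsIgnoreCase(scheme)) {
            return "TUNNEL";
        }
        if ("http".equalsIgnoreCase(scheme)) {
            return "NEVER";
        }
        throw new IllegalArgumentException("Unsupported scheme: " + scheme);
    }

    public static void main(String[] args) {
        System.out.println(resolveAuto("https://myaccount.region.snowflakecomputing.com"));
    }
}
```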

ProxyExceptions

A semicolon-separated list of destination hostnames or IPs that are exempt from connecting through the ProxyServer.

Data Type

string

Default Value

""

Remarks

The ProxyServer is used for all addresses, except for addresses defined in this property. Use semicolons to separate entries.

Note that the driver uses the system proxy settings by default, without further configuration needed; if you want to explicitly configure proxy exceptions for this connection, you need to set ProxyAutoDetect = false, and configure ProxyServer and ProxyPort. To authenticate, set ProxyAuthScheme and set ProxyUser and ProxyPassword, if needed.
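
To make the semicolon-separated format concrete, the following Java sketch checks whether a host appears in a ProxyExceptions value. Exact, case-insensitive matching is an assumption made for illustration; the driver's actual matching rules are not described here, and the class and method names are hypothetical.

```java
import java.util.Arrays;

final class ProxyExceptionsSketch {
    // Returns true if the host is listed in the semicolon-separated value.
    // Assumption: entries are compared as whole names, ignoring case.
    static boolean isExempt(String proxyExceptions, String host) {
        return Arrays.stream(proxyExceptions.split(";"))
                .map(String::trim)
                .filter(entry -> !entry.isEmpty())
                .anyMatch(entry -> entry.equalsIgnoreCase(host));
    }

    public static void main(String[] args) {
        System.out.println(isExempt("localhost;10.0.0.5", "10.0.0.5"));
    }
}
```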

Logfile

A filepath which designates the name and location of the log file.

Data Type

string

Default Value

""

Remarks

Once this property is set, the driver will populate the log file as it carries out various tasks, such as when authentication is performed or queries are executed. If the specified file doesn't already exist, it will be created.

Connection strings and version information are also logged, though connection properties containing sensitive information are masked automatically.

If a relative filepath is supplied, the location of the log file will be resolved based on the path found in the Location connection property.

For more control over what is written to the log file, you can adjust the Verbosity property.

Log contents are categorized into several modules. You can show/hide individual modules using the LogModules property.

To edit the maximum size of a single logfile before a new one is created, see MaxLogFileSize.

If you would like to place a cap on the number of logfiles generated, use MaxLogFileCount.

Java Logging

Java logging is also supported. To enable Java logging, set Logfile to:

Logfile=JAVALOG://myloggername

As in the above sample, JAVALOG:// is a required prefix to use Java logging, and you will substitute your own Logger.

The supplied Logger's getLogger method is then called, using the supplied value to create the Logger instance. If a logging instance already exists, it will reference the existing instance.

When Java logging is enabled, the Verbosity will now correspond to specific logging levels.
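
The following Java sketch shows one way an application might prepare a named logger before pointing Logfile at it. It assumes that "Java logging" refers to the standard java.util.logging package; the class name and the in-memory handler are illustrative only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

final class JavaLogSketch {
    // Keep a strong reference so the configured logger is not garbage collected.
    static final Logger LOGGER = Logger.getLogger("myloggername");
    static final List<String> CAPTURED = new ArrayList<>();

    // Attaches a handler that stores each record in memory.
    static void configure() {
        LOGGER.setLevel(Level.ALL);
        LOGGER.setUseParentHandlers(false);
        LOGGER.addHandler(new Handler() {
            @Override public void publish(LogRecord record) {
                CAPTURED.add(record.getLevel() + ": " + record.getMessage());
            }
            @Override public void flush() { }
            @Override public void close() { }
        });
    }

    // The value to place in the connection string for this logger.
    static String logfileProperty() {
        return "Logfile=JAVALOG://" + LOGGER.getName();
    }

    public static void main(String[] args) {
        configure();
        LOGGER.info("logger ready");
        System.out.println(logfileProperty());
        System.out.println(CAPTURED);
    }
}
```

Because Logger.getLogger returns the existing instance for a given name while it is still referenced, any later lookup of the same name would reuse the handler configured here.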

Verbosity

The verbosity level that determines the amount of detail included in the log file.

Data Type

string

Default Value

"1"

Remarks

The verbosity level determines the amount of detail that the driver reports to the Logfile. Verbosity levels from 1 to 5 are supported. These are detailed in the Logging page.

LogModules

Core modules to be included in the log file.

Data Type

string

Default Value

""

Remarks

Only the modules specified (separated by ';') will be included in the log file. By default all modules are included.

See the Logging page for an overview.

MaxLogFileSize

A string specifying the maximum size in bytes for a log file (for example, 10 MB).

Data Type

string

Default Value

"100MB"

Remarks

When the limit is hit, a new log is created in the same folder with the date and time appended to the end. The default limit is 100 MB. Values lower than 100 kB will use 100 kB as the value instead.

Adjust the maximum number of logfiles generated with MaxLogFileCount.
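
The 100 kB floor can be illustrated with a short Java sketch. The parsing rules (optional kB/MB/GB suffix, binary units where 1 kB = 1024 bytes) are assumptions made for this example and may differ from how the driver interprets the value; the class and method names are hypothetical.

```java
import java.util.Locale;

final class MaxLogFileSizeSketch {
    private static final long KB = 1024L;            // assumption: binary units
    private static final long MIN_BYTES = 100 * KB;  // values below 100 kB are raised to 100 kB

    // Converts a size string such as "100MB" to bytes and applies the floor.
    static long effectiveBytes(String value) {
        String v = value.replace(" ", "").toUpperCase(Locale.ROOT);
        long multiplier = 1L;
        if (v.endsWith("GB")) {
            multiplier = KB * KB * KB;
        } else if (v.endsWith("MB")) {
            multiplier = KB * KB;
        } else if (v.endsWith("KB")) {
            multiplier = KB;
        }
        String digits = multiplier == 1L ? v : v.substring(0, v.length() - 2);
        return Math.max(Long.parseLong(digits) * multiplier, MIN_BYTES);
    }

    public static void main(String[] args) {
        System.out.println(effectiveBytes("100MB"));
    }
}
```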

MaxLogFileCount

The maximum number of log files to keep.

Data Type

int

Default Value

-1

Remarks

When the limit is hit, a new log is created in the same folder with the date and time appended to the end and the oldest log file will be deleted.

The minimum supported value is 2. A value of 0 or a negative value indicates no limit on the count.

Adjust the maximum size of the logfiles generated with MaxLogFileSize.

Location

A path to the directory that contains the schema files defining tables, views, and stored procedures.

Data Type

string

Default Value

"%APPDATA%\CData\Snowflake Data Provider\Schema"

Remarks

The path to a directory which contains the schema files for the driver (.rsd files for tables and views, .rsb files for stored procedures). The folder location can be a relative path from the location of the executable. The Location property is only needed if you want to customize definitions (for example, change a column name, ignore a column, and so on) or extend the data model with new tables, views, or stored procedures.

If left unspecified, the default location is "%APPDATA%\CData\Snowflake Data Provider\Schema" with %APPDATA% being set to the user's configuration directory:

| Platform | %APPDATA% |

| Windows | The value of the APPDATA environment variable |

| Mac | ~/Library/Application Support |

| Linux | ~/.config |
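
The platform table can be read as the following lookup. This Java sketch is illustrative only; the class and method names are hypothetical, and detecting the platform from the `os.name` system property is an assumption.

```java
import java.util.Locale;

final class AppDataSketch {
    // Resolves the directory that %APPDATA% stands for on each platform.
    static String resolveAppData(String osName, String userHome, String appDataEnv) {
        String os = osName.toLowerCase(Locale.ROOT);
        if (os.startsWith("mac")) {
            return userHome + "/Library/Application Support";
        }
        if (os.startsWith("windows")) {
            return appDataEnv; // value of the APPDATA environment variable
        }
        return userHome + "/.config"; // Linux
    }

    public static void main(String[] args) {
        System.out.println(resolveAppData(
                System.getProperty("os.name"),
                System.getProperty("user.home"),
                System.getenv("APPDATA")));
    }
}
```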

BrowsableSchemas

This property restricts the schemas reported to a subset of the available schemas. For example, BrowsableSchemas=SchemaA,SchemaB,SchemaC.

Data Type

string

Default Value

""

Remarks

Listing the schemas from databases can be expensive. Providing a list of schemas in the connection string improves the performance.

Tables

This property restricts the tables reported to a subset of the available tables. For example, Tables=TableA,TableB,TableC.

Data Type

string

Default Value

""

Remarks

Listing the tables from some databases can be expensive. Providing a list of tables in the connection string improves the performance of the driver.

This property can also be used as an alternative to automatically listing tables if you already know which ones you want to work with and there would otherwise be too many to work with.

Specify the tables you want in a comma-separated list. Each table should be a valid SQL identifier with any special characters escaped using square brackets, double-quotes or backticks. For example, Tables=TableA,[TableB/WithSlash],WithCatalog.WithSchema.`TableC With Space`.

Note that when connecting to a data source with multiple schemas or catalogs, you will need to provide the fully qualified name of the table in this property, as in the last example here, to avoid ambiguity between tables that exist in multiple catalogs or schemas.
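
As an illustration of the escaping rule, the following Java sketch builds a Tables value from a list of unqualified table names and wraps any name containing special characters in square brackets. The class and method names are hypothetical, and the sketch does not handle fully qualified names or names that themselves contain a closing bracket.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class TablesPropertySketch {
    // Leaves plain identifiers as-is and brackets everything else.
    static String escape(String name) {
        return name.matches("[A-Za-z_][A-Za-z0-9_]*") ? name : "[" + name + "]";
    }

    // Joins the escaped names into a comma-separated Tables property.
    static String tablesProperty(List<String> names) {
        return "Tables=" + names.stream()
                .map(TablesPropertySketch::escape)
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        System.out.println(tablesProperty(Arrays.asList("TableA", "TableB/WithSlash")));
    }
}
```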

Views

Restricts the views reported to a subset of the available views. For example, Views=ViewA,ViewB,ViewC.

Data Type

string

Default Value

""

Remarks

Listing the views from some databases can be expensive. Providing a list of views in the connection string improves the performance of the driver.

This property can also be used as an alternative to automatically listing views if you already know which ones you want to work with and there would otherwise be too many to work with.

Specify the views you want in a comma-separated list. Each view should be a valid SQL identifier with any special characters escaped using square brackets, double-quotes or backticks. For example, Views=ViewA,[ViewB/WithSlash],WithCatalog.WithSchema.`ViewC With Space`.

Note that when connecting to a data source with multiple schemas or catalogs, you will need to provide the fully qualified name of the view in this property, as in the last example here, to avoid ambiguity between views that exist in multiple catalogs or schemas.

Database

The name of the Snowflake database.

Data Type

string

Default Value

""

Remarks

The name of the Snowflake database.

Schema

The schema of the Snowflake database.

Data Type

string

Default Value

""

Remarks

The schema of the Snowflake database.

AutoCache

Automatically caches the results of SELECT queries into a cache database specified by either CacheLocation or both of CacheConnection and CacheDriver.

Data Type

bool

Default Value

false

Remarks

When AutoCache = true, the driver automatically maintains a cache of your table's data in the database of your choice.

Setting the Caching Database

When AutoCache = true, the driver caches to a simple, file-based cache. You can configure its location or cache to a different database with the following properties:

- CacheLocation: Specifies the path to the file store.

- CacheDriver and CacheConnection: Specifies a driver to a database and the connection string.

See Also

- CacheMetadata: This property reduces the amount of metadata that crosses the network by persisting table schemas retrieved from the Snowflake metadata. Metadata then needs to be retrieved only once instead of every connection.

- Explicitly Caching Data: This section provides more examples of using AutoCache in Offline mode.

- CACHE Statements: You can use the CACHE statement to persist any SELECT query, as well as manage the cache; for example, refreshing schemas.

CacheDriver

The database driver to be used to cache data.

Data Type

string

Default Value

""

Remarks

You can cache to any database for which you have a JDBC driver, including CData JDBC drivers.

The cache database is determined based on the CacheDriver and CacheConnection properties. The CacheDriver is the name of the JDBC driver class that you want to use to cache data.

Note that you must also add the CacheDriver JAR file to the classpath.
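
One quick way to confirm that a cache driver JAR is visible is to try to load its driver class by name. The following Java sketch does only that; the class and method names are hypothetical, and the Derby class name is taken from the example URLs in this section.

```java
final class CacheDriverClasspathSketch {
    // Returns true when the named class can be located by this class's class loader.
    static boolean isOnClasspath(String driverClassName) {
        try {
            // Locate the class without running its static initializers.
            Class.forName(driverClassName, false,
                    CacheDriverClasspathSketch.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = "org.apache.derby.jdbc.EmbeddedDriver";
        System.out.println(driver + " on classpath: " + isOnClasspath(driver));
    }
}
```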

The following examples show how to cache to several major databases. Refer to CacheConnection for more information on the JDBC URL syntax and typical connection properties.

Derby and Java DB

The driver simplifies Derby configuration. Java DB is the Oracle distribution of Derby. The JAR file is shipped in the JDK. You can find the JAR file, derby.jar, in the db subfolder of the JDK installation. In most caching scenarios, you need to specify only the following, after adding derby.jar to the classpath:

jdbc:snowflake:CacheLocation='c:/Temp/cachedir';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

To customize the Derby JDBC URL, use CacheDriver and CacheConnection. For example, to cache to an in-memory database, use a JDBC URL like the following:

jdbc:snowflake:CacheDriver=org.apache.derby.jdbc.EmbeddedDriver;CacheConnection='jdbc:derby:memory';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

SQLite

The following is a JDBC URL for the SQLite JDBC driver:

jdbc:snowflake:CacheDriver=org.sqlite.JDBC;CacheConnection='jdbc:sqlite:C:/Temp/sqlite.db';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

MySQL

The following is a JDBC URL for the included CData JDBC Driver for MySQL:

jdbc:snowflake:Cache Driver=cdata.jdbc.mysql.MySQLDriver;Cache Connection='jdbc:mysql:Server=localhost;Port=3306;Database=cache;User=root;Password=123456';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

The CData JDBC Driver for MySQL is located in the lib subfolder of the CData JDBC Driver for Snowflake installation directory.

SQL Server

The following JDBC URL uses the Microsoft JDBC Driver for SQL Server:

jdbc:snowflake:Cache Driver=com.microsoft.sqlserver.jdbc.SQLServerDriver;Cache Connection='jdbc:sqlserver://localhost\sqlexpress:7437;user=sa;password=123456;databaseName=Cache';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

Oracle

The following is a JDBC URL for the Oracle Thin Client:

jdbc:snowflake:Cache Driver=oracle.jdbc.OracleDriver;CacheConnection='jdbc:oracle:thin:scott/tiger@localhost:1521:orcldb';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

NOTE: If using a version of Oracle older than 9i, the cache driver will instead be oracle.jdbc.driver.OracleDriver.

PostgreSQL

The following JDBC URL uses the official PostgreSQL JDBC driver:

jdbc:snowflake:CacheDriver=org.postgresql.Driver;CacheConnection='jdbc:postgresql://localhost:5433/postgres?user=postgres&password=admin';url=https://myaccount.region.snowflakecomputing.com;user=Admin;password=test123;Database=Northwind;Warehouse=TestWarehouse;Account=Tester1;

CacheConnection

The connection string for the cache database. This property is always used in conjunction with CacheDriver. Setting both properties will override the value set for CacheLocation for caching data.

Data Type

string

Default Value

""

Remarks

The cache database is determined based on the CacheDriver and CacheConnection properties. Both properties are required to use the cache database. Examples of common cache database settings can be found below. For more information on setting the caching database's driver, refer to CacheDriver.

The connection string specified in the CacheConnection property is passed directly to the underlying CacheDriver. Consult the documentation for the specific JDBC driver for more information on the available properties. Make sure to include the JDBC driver in your application's classpath.

Derby and Java DB

The driver simplifies caching to Derby, only requiring you to set the CacheLocation property to make a basic connection.

Alternatively, you can configure the connection to Derby manually using CacheDriver and CacheConnection. The following is the Derby JDBC URL syntax:

jdbc:derby:[subsubprotocol:][databaseName][;attribute=value[;attribute=value] ... ]

For example, to cache to an in-memory database, use the following:

jdbc:derby:memory

SQLite

To cache to SQLite, you can use the SQLite JDBC driver. The following is the syntax of the JDBC URL:

jdbc:sqlite:dataSource

- Data Source: The path to an SQLite database file. Or, use a value of :memory: to cache in memory.

MySQL

The installation includes the CData JDBC Driver for MySQL. The following is an example JDBC URL:

jdbc:mysql:User=root;Password=root;Server=localhost;Port=3306;Database=cache

The following are typical connection properties:

- Server: The IP address or domain name of the server you want to connect to.

- Port: The port that the server is running on.

- User: The user name provided for authentication to the database.

- Password: The password provided for authentication to the database.

- Database: The name of the database.

SQL Server

The JDBC URL for the Microsoft JDBC Driver for SQL Server has the following syntax:

jdbc:sqlserver://[serverName[\instance][:port]][;database=databaseName][;property=value[;property=value] ... ]

For example:

jdbc:sqlserver://localhost\sqlexpress:1433;integratedSecurity=true

The following are typical SQL Server connection properties:

- Server: The name or network address of the computer running SQL Server. To connect to a named instance instead of the default instance, this property can be used to specify the host name and the instance, separated by a backslash.

- Port: The port SQL Server is running on.

- Database: The name of the SQL Server database.

- Integrated Security: Set this option to true to use the current Windows account for authentication. Set this option to false if you are setting the User and Password in the connection.

To use integrated security, you will also need to add sqljdbc_auth.dll to a folder on the Windows system path. This file is located in the auth subfolder of the Microsoft JDBC Driver for SQL Server installation. The bitness of the assembly must match the bitness of your JVM.

- User ID: The user name provided for authentication with SQL Server. This property is only needed if you are not using integrated security.

- Password: The password provided for authentication with SQL Server. This property is only needed if you are not using integrated security.

Oracle

The following is the conventional JDBC URL syntax for the Oracle JDBC Thin driver:

jdbc:oracle:thin:[userId/password]@[//]host[[:port][:sid]]

For example:

jdbc:oracle:thin:scott/tiger@myhost:1521:orcl

The following are typical connection properties:

- Data Source: The connect descriptor that identifies the Oracle database. This can be a TNS connect descriptor, an Oracle Net Services name that resolves to a connect descriptor, or, after version 11g, an Easy Connect naming (the host name of the Oracle server with an optional port and service name).

- Password: The password provided for authentication with the Oracle database.

- User Id: The user Id provided for authentication with the Oracle database.

PostgreSQL

The following is the JDBC URL syntax for the official PostgreSQL JDBC driver:

jdbc:postgresql:[//[host[:port]]/]database[[?option=value][[&option=value][&option=value] ... ]]

For example, the following connection string connects to a database on the default host (localhost) and port (5432):

jdbc:postgresql:postgres

The following are typical connection properties:

- Host: The address of the server hosting the PostgreSQL database.

- Port: The port used to connect to the server hosting the PostgreSQL database.

- Database: The name of the database.

- User name: The user Id provided for authentication with the PostgreSQL database. You can specify this in the JDBC URL with the "user" parameter.

- Password: The password provided for authentication with the PostgreSQL database.

CacheLocation

Specifies the path to the cache when caching to a file.

Data Type

string

Default Value

"%APPDATA%\CData\Snowflake Data Provider"

Remarks

The CacheLocation is a simple, file-based cache. The driver uses Java DB, Oracle's distribution of the Derby database. To cache to Java DB, you will need to add the Java DB JAR file to the classpath. The JAR file, derby.jar, is shipped in the JDK and located in the db subfolder of the JDK installation.

If left unspecified, the default location is "%APPDATA%\CData\Snowflake Data Provider" with %APPDATA% being set to the user's configuration directory:

| Platform | %APPDATA% |

| Windows | The value of the APPDATA environment variable |

| Mac | ~/Library/Application Support |

| Linux | ~/.config |

See Also

- AutoCache: Set to implicitly create and maintain a cache for later offline use.

- CacheMetadata: Set to persist the Snowflake catalog in CacheLocation.

CacheTolerance

The tolerance, in seconds, for stale data in the cache when using AutoCache.

Data Type

int

Default Value

600

Remarks

The tolerance for stale data in the cache specified in seconds. This only applies when AutoCache is used. The driver checks with the data source for newer records after the tolerance interval has expired. Otherwise, it returns the data directly from the cache.
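
The staleness rule can be sketched as a simple time comparison. The following Java example is a hypothetical illustration, not the driver's implementation; in particular, treating the interval as expired only when strictly more than the tolerance has elapsed is an assumption.

```java
import java.time.Duration;
import java.time.Instant;

final class CacheToleranceSketch {
    // Returns true when the cache is older than the tolerance and the
    // live source should be checked for newer records.
    static boolean shouldCheckSource(Instant lastRefresh, Instant now, int toleranceSeconds) {
        return Duration.between(lastRefresh, now).getSeconds() > toleranceSeconds;
    }

    public static void main(String[] args) {
        Instant lastRefresh = Instant.now().minusSeconds(900);
        System.out.println(shouldCheckSource(lastRefresh, Instant.now(), 600));
    }
}
```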

Offline

Use offline mode to get the data from the cache instead of the live source.

Data Type

bool

Default Value

false

Remarks

When Offline = true, all queries execute against the cache as opposed to the live data source. In this mode, certain queries like INSERT, UPDATE, DELETE, and CACHE are not allowed.
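
An application could screen statements before sending them in offline mode, as in the following Java sketch. The blocked list contains only the four statement types named above and may not be exhaustive; the class and method names are hypothetical, and the check looks only at the first keyword.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

final class OfflineModeSketch {
    // Statement types named as not allowed when Offline = true.
    private static final List<String> BLOCKED =
            Arrays.asList("INSERT", "UPDATE", "DELETE", "CACHE");

    // Returns false if the statement starts with a blocked keyword.
    static boolean allowedOffline(String sql) {
        String firstWord = sql.trim().split("\\s+", 2)[0].toUpperCase(Locale.ROOT);
        return !BLOCKED.contains(firstWord);
    }

    public static void main(String[] args) {
        System.out.println(allowedOffline("SELECT * FROM \"Table\""));
        System.out.println(allowedOffline("DELETE FROM \"Table\""));
    }
}
```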

CacheMetadata

This property determines whether or not to cache the table metadata to a file store.

Data Type

bool

Default Value

false

Remarks

As you execute queries with this property set, table metadata in the Snowflake catalog are cached to the file store specified by CacheLocation if set or the user's home directory otherwise. A table's metadata will be retrieved only once, when the table is queried for the first time.

When to Use CacheMetadata

The driver automatically persists metadata in memory for up to two hours when you first discover the metadata for a table or view and therefore, CacheMetadata is generally not required. CacheMetadata becomes useful when metadata operations are expensive such as when you are working with large amounts of metadata or when you have many short-lived connections.

When Not to Use CacheMetadata

- When you are working with volatile metadata: Metadata for a table is only retrieved the first time the connection to the table is made. To pick up new, changed, or deleted columns, you would need to delete and rebuild the metadata cache. Therefore, it is best to rely on the in-memory caching for cases where metadata changes often.

- When you are caching to a database: CacheMetadata can only be used with CacheLocation. If you are caching to another database with the CacheDriver and CacheConnection properties, use AutoCache to cache implicitly. Or, use CACHE Statements to cache explicitly.

AllowPreparedStatement

Prepare a query statement before its execution.

Data Type

bool

Default Value

false

Remarks

If the AllowPreparedStatement property is set to false, statements are parsed each time they are executed. Setting this property to false can be useful if you are executing many different queries only once.

If you are executing the same query repeatedly, you will generally see better performance by setting this property to true. Preparing the query avoids recompiling the same query over and over. However, prepared statements also require the driver to keep the connection active and open while the statement is prepared.

ApplicationName

The application name connection string property expresses the client application's name.

Data Type

string

Default Value

""

Remarks

The application name connection string property expresses the client application's name.

AsyncQueryTimeout

The timeout for asynchronous requests issued by the provider to download large result sets.

Data Type

int

Default Value

300

Remarks

If the AsyncQueryTimeout property is set to 0, asynchronous operations will not time out; instead, they will run until they complete successfully or encounter an error condition. This property is distinct from Timeout which applies to individual HTTP operations while AsyncQueryTimeout applies to execution time of the operation as a whole.

If AsyncQueryTimeout expires and the asynchronous request has not finished being processed, the driver raises an error condition.

BatchSize

The maximum size of each batch operation to submit.

Data Type

int

Default Value

0

Remarks

When BatchSize is set to a value greater than 0, the batch operation will split the entire batch into separate batches of size BatchSize. The split batches will then be submitted to the server individually. This is useful when the server has limitations on the size of the request that can be submitted.

Setting BatchSize to 0 will submit the entire batch as specified.
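
The splitting behavior described above can be sketched as follows. This Java example is an illustration of the rule rather than the driver's code, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class BatchSizeSketch {
    // Splits a batch into chunks of at most batchSize items.
    // A batchSize of 0 (or less) keeps the batch whole.
    static <T> List<List<T>> split(List<T> batch, int batchSize) {
        List<List<T>> chunks = new ArrayList<>();
        if (batchSize <= 0 || batch.size() <= batchSize) {
            chunks.add(new ArrayList<>(batch));
            return chunks;
        }
        for (int start = 0; start < batch.size(); start += batchSize) {
            int end = Math.min(start + batchSize, batch.size());
            chunks.add(new ArrayList<>(batch.subList(start, end)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        System.out.println(split(Arrays.asList(1, 2, 3, 4, 5), 2));
    }
}
```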

ConnectionLifeTime

The maximum lifetime of a connection in seconds. Once the time has elapsed, the connection object is disposed.

Data Type

int

Default Value

0

Remarks

The maximum lifetime of a connection in seconds. Once the time has elapsed, the connection object is disposed. The default is 0 which indicates there is no limit to the connection lifetime.

ConnectOnOpen

This property specifies whether to connect to Snowflake when the connection is opened.

Data Type

bool

Default Value

false

Remarks

When set to true, a connection will be made to Snowflake when the connection is opened. This property enables the Test Connection feature available in various database tools.

This feature acts as a NOOP command as it is used to verify a connection can be made to Snowflake and nothing from this initial connection is maintained.

Setting this property to false may provide performance improvements (depending upon the number of times a connection is opened).

CustomStage

The name of a custom stage to use during bulk write operations.

Data Type

string

Default Value

""

Remarks

The name of a custom stage to use during bulk write operations. This can be an internal or external stage. If the stage is external, the AWS or Azure credentials must be provided as well via the ExternalStageAWSAccessKey/ExternalStageAWSSecretKey or ExternalStageAzureAccessKey properties.

When this is left unspecified, the driver will generate a temporary stage automatically during the upload process and delete it after the upload is complete.

EnableArrow

Whether to support Apache Arrow.

Data Type

bool

Default Value

true

Remarks

Whether to support Apache Arrow.

ExternalStageAWSAccessKey

Your AWS account access key. Only used when defining a CustomStage for bulk write operations.

Data Type

string

Default Value

""

Remarks

Your AWS account access key. This value is accessible from your AWS security credentials page:

- Sign into the AWS Management console with the credentials for your root account.

- Select your account name or number and select My Security Credentials in the menu that is displayed.

- Click Continue to Security Credentials and expand the Access Keys section to manage or create root account access keys.

ExternalStageAWSSecretKey

Your AWS account secret key. Only used when defining a CustomStage for bulk write operations.

Data Type

string

Default Value

""

Remarks

Your AWS account secret key. This value is accessible from your AWS security credentials page:

- Sign into the AWS Management console with the credentials for your root account.

- Select your account name or number and select My Security Credentials in the menu that is displayed.

- Click Continue to Security Credentials and expand the Access Keys section to manage or create root account access keys.

ExternalStageAzureSASToken

The string value of the Azure Blob shared access signature.

Data Type

string

Default Value

""

Remarks

The string value of the Azure Blob shared access signature.

In the Azure Portal, go to "Shared access signature" in the "Settings" section of your Azure Blob container, click "Generate SAS token and URL", and copy the value from the "Blob SAS token" text box. Before you generate the SAS token, be careful to select the proper permissions (Create, Write, Delete) in the "Permissions" drop-down list and to verify the Start and Expiry times.

IgnoreCase

Whether to ignore case in identifiers. Default: false.

Data Type

bool

Default Value

false

Remarks

A session parameter that specifies whether Snowflake will treat identifiers as case sensitive. Default: false (case is sensitive).

IncludeTableTypes

If set to true, the provider will report the types of individual tables and views.

Data Type

bool

Default Value

false

Remarks

If set to true, the driver will report the types of individual tables and views.

MaxRows

Limits the number of rows returned when no aggregation or GROUP BY is used in the query. This helps avoid performance issues at design time.

Data Type

int

Default Value

-1

Remarks

Limits the number of rows returned when no aggregation or GROUP BY is used in the query. This helps avoid performance issues at design time.

MaxThreads

Specifies the number of concurrent requests.

Data Type

string

Default Value

"5"

Remarks

This property allows you to issue multiple requests simultaneously, thereby improving performance.

MergeDelete

A boolean indicating whether batch DELETE statements should be converted to MERGE statements automatically. Only used when the DELETE statement's WHERE clause references only the table's primary key fields, combined with the AND logical operator.

Data Type

bool

Default Value

false

Remarks

A boolean indicating whether DELETE statements should be converted to MERGE statements automatically to allow for upsert functionality. This property is primarily intended for use with tools where you have no direct control over the queries being executed. Otherwise, as long as Query Passthrough is True, you could execute the MERGE command directly.

When this property is False, bulk DELETE statements won't be executed against the server. When it is set to True and the DELETE query contains the primary key field, the driver sends a MERGE query that executes a DELETE if a match is found in Snowflake. For example, this query:

DELETE FROM "Table" WHERE "ID" = 1 AND "NAME" = 'Jerry'

Will be sent to Snowflake as the following MERGE request:

MERGE INTO "Table" AS "Target" USING "RTABLE1_TMP_20eca05b-c050-47dd-89bc-81c7f617f877" AS "Source" ON ("Target"."ID" = "Source"."ID" AND "Target"."NAME" = "Source"."NAME")

WHEN MATCHED THEN DELETE

MergeInsert

A boolean indicating whether INSERT statements should be converted to MERGE statements automatically. Only used when the INSERT contains a table's primary key field.

Data Type

bool

Default Value

false

Remarks

A boolean indicating whether INSERT statements should be converted to MERGE statements automatically to allow for upsert functionality. This property is primarily intended for use with tools where you have no direct control over the queries being executed. Otherwise, as long as Query Passthrough is True, you could execute the MERGE command directly.

When this property is False, INSERT statements are executed directly against the server. When it is set to True and the INSERT query contains the primary key field, the driver will send a MERGE query that will execute an INSERT if no match is found in Snowflake or an UPDATE if one is. For example, this query:

INSERT INTO "Table" ("ID", "NAME", "AGE") VALUES (1, 'NewName', 10)

Will be sent to Snowflake as the following MERGE request:

MERGE INTO "Table" AS "Target" USING (SELECT 1 AS "ID") AS "Source" ON ("Target"."ID" = "Source"."ID")

WHEN NOT MATCHED THEN INSERT ("ID", "NAME", "AGE") VALUES (1, 'NewName', 10)

WHEN MATCHED THEN UPDATE SET "NAME" = 'NewName', "AGE" = 10
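
The shape of this rewrite can be illustrated with a minimal Java sketch. It is not the driver's implementation: the class MergeInsertSketch and its method are hypothetical, and the sketch assumes a single primary key column, values passed as ready-to-embed SQL literals, and a source alias written in double quotes. It performs no SQL escaping.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration only: reproduces the shape of the INSERT-to-MERGE
// rewrite described above. This is not the driver's code and it does no escaping.
final class MergeInsertSketch {

    // columns and literals are parallel lists; keyColumn must be one of columns.
    static String toMerge(String table, List<String> columns,
                          List<String> literals, String keyColumn) {
        if (columns.size() != literals.size()) {
            throw new IllegalArgumentException("columns and literals must have the same size");
        }
        int keyIndex = columns.indexOf(keyColumn);
        if (keyIndex < 0) {
            throw new IllegalArgumentException("key column is not part of the INSERT");
        }
        List<String> quoted = new ArrayList<>();
        List<String> assignments = new ArrayList<>();
        for (int i = 0; i < columns.size(); i++) {
            String q = "\"" + columns.get(i) + "\"";
            quoted.add(q);
            // Only non-key columns are updated when a matching row exists.
            if (i != keyIndex) {
                assignments.add(q + " = " + literals.get(i));
            }
        }
        String key = quoted.get(keyIndex);
        return "MERGE INTO \"" + table + "\" AS \"Target\""
                + " USING (SELECT " + literals.get(keyIndex) + " AS " + key + ") AS \"Source\""
                + " ON (\"Target\"." + key + " = \"Source\"." + key + ")"
                + " WHEN NOT MATCHED THEN INSERT (" + String.join(", ", quoted) + ")"
                + " VALUES (" + String.join(", ", literals) + ")"
                + " WHEN MATCHED THEN UPDATE SET " + String.join(", ", assignments);
    }
}
```

Calling toMerge with the table "Table", the columns ID, NAME, and AGE, the literals 1, 'NewName', and 10, and ID as the key column yields a single-line statement with the same clauses as the MERGE request shown above.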

MergeUpdate

A boolean indicating whether batch UPDATE statements should be converted to MERGE statements automatically. Only used when the UPDATE statement's WHERE clause contains only the table's primary key fields and they are combined with the AND logical operator.

Data Type

bool

Default Value

false

Remarks

A boolean indicating whether UPDATE statements should be converted to MERGE statements automatically to allow for upsert functionality. This property is primarily intended for use with tools where you have no direct control over the queries being executed. Otherwise, as long as Query Passthrough is True, you could execute the MERGE command directly.

When this property is False, UPDATE statements are executed directly against the server. When it is set to True and the UPDATE query contains the primary key field, the driver will send a MERGE query that will execute an UPDATE if a match is found in Snowflake. For example, this query:

UPDATE "Table" SET "NAME" = 'NewName', "AGE" = 10 WHERE "ID" = 1

Will be sent to Snowflake as the following MERGE request:

MERGE INTO "Table" AS "Target" USING "RTABLE1_TMP_20eca05b-c050-47dd-89bc-81c7f617f877" AS "Source" ON ("Target"."ID" = "Source"."ID")

WHEN MATCHED THEN UPDATE SET "Target"."NAME" = "Source"."NAME", "Target"."AGE" = "Source"."AGE"

Other

These hidden properties are used only in specific use cases.

Data Type

string

Default Value

""

Remarks

The properties listed below are available for specific use cases. Normal driver use cases and functionality should not require these properties.

Specify multiple properties in a semicolon-separated list.

Caching Configuration

| CachePartial=True | Caches only a subset of columns, which you can specify in your query. |

| QueryPassthrough=True | Passes the specified query to the cache database instead of using the SQL parser of the driver. |

Integration and Formatting

| DefaultColumnSize | Sets the default length of string fields when the data source does not provide column length in the metadata. The default value is 2000. |

| ConvertDateTimeToGMT | Determines whether to convert date-time values to GMT, instead of the local time of the machine. |

| RecordToFile=filename | Records the underlying socket data transfer to the specified file. |

Pagesize

The maximum number of results to return per page from Snowflake.

Data Type

int

Default Value

5000

Remarks

The Pagesize property sets the maximum number of results to return per page from Snowflake. Setting a higher value may result in better performance at the cost of additional memory consumed per page.

PoolIdleTimeout

The allowed idle time for a connection before it is closed.

Data Type

int

Default Value

60

Remarks

The allowed idle time a connection can remain in the pool until the connection is closed. The default is 60 seconds.

PoolMaxSize

The maximum number of connections in the pool.

Data Type

int

Default Value

100

Remarks

The maximum number of connections in the pool. The default is 100. To disable this property, set the property value to 0 or less.

PoolMinSize

The minimum number of connections in the pool.

Data Type

int

Default Value

1

Remarks

The minimum number of connections in the pool. The default is 1.

PoolWaitTime

The maximum number of seconds to wait for an available connection.

Data Type

int

Default Value

60

Remarks

The maximum number of seconds to wait for a connection to become available. If a new connection request is waiting for an available connection and exceeds this time, an error is thrown. By default, new requests wait forever for an available connection.
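
The four pool settings above could be collected into a java.util.Properties object, as in the minimal sketch below. The class and method names are hypothetical, and it is an assumption that the driver reads these settings from a Properties object rather than only from the connection URL. The sketch does not cover how connection pooling itself is enabled.

```java
import java.util.Properties;

// Hypothetical helper: gathers the pool settings described above into a
// Properties object. Only the property names come from this reference.
final class PoolSettingsSketch {

    static Properties poolProperties(int idleTimeoutSeconds, int maxSize,
                                     int minSize, int waitTimeSeconds) {
        if (minSize < 0) {
            throw new IllegalArgumentException("PoolMinSize must not be negative");
        }
        // A PoolMaxSize of 0 or less disables the limit, so only compare when positive.
        if (maxSize > 0 && minSize > maxSize) {
            throw new IllegalArgumentException("PoolMinSize must not exceed PoolMaxSize");
        }
        Properties props = new Properties();
        props.setProperty("PoolIdleTimeout", Integer.toString(idleTimeoutSeconds));
        props.setProperty("PoolMaxSize", Integer.toString(maxSize));
        props.setProperty("PoolMinSize", Integer.toString(minSize));
        props.setProperty("PoolWaitTime", Integer.toString(waitTimeSeconds));
        return props;
    }
}
```

A Properties object built this way can be passed as the second argument to java.sql.DriverManager.getConnection(String, Properties), together with the connection URL of your data source.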

PseudoColumns

This property indicates whether or not to include pseudo columns as columns in the table.

Data Type

string

Default Value

""

Remarks

This setting is particularly helpful in Entity Framework, which does not allow you to set a value for a pseudo column unless it is a table column. The value of this connection setting is of the format "Table1=Column1, Table1=Column2, Table2=Column3". You can use the "*" character to include all tables and all columns; for example, "*=*".
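
The value format described above can be produced from a table-to-columns mapping, as in the following minimal sketch. The class PseudoColumnsSketch is hypothetical and only assembles the string. It does not check that the tables or columns exist.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper: builds a PseudoColumns value in the
// "Table1=Column1, Table1=Column2, Table2=Column3" format described above.
final class PseudoColumnsSketch {

    // Pass a map with a stable iteration order (for example LinkedHashMap)
    // if the order of the pairs matters to you.
    static String format(Map<String, List<String>> columnsByTable) {
        List<String> pairs = new ArrayList<>();
        for (Map.Entry<String, List<String>> entry : columnsByTable.entrySet()) {
            for (String column : entry.getValue()) {
                pairs.add(entry.getKey() + "=" + column);
            }
        }
        return String.join(", ", pairs);
    }
}
```

A map with the single entry "*" mapped to the list containing "*" produces the wildcard value "*=*".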

QueryPassthrough

This option passes the query to the Snowflake server as is.

Data Type

bool

Default Value

false

Remarks

When this is set, queries are passed through directly to Snowflake.

Readonly

You can use this property to enforce read-only access to Snowflake from the provider.

Data Type

bool

Default Value

false

Remarks

If this property is set to true, the driver will allow only SELECT queries. INSERT, UPDATE, DELETE, and stored procedure queries will cause an error to be thrown.

ReplaceInvalidUTF8Chars

Specifies whether to replace invalid UTF8 characters with a '?'.

Data Type

bool

Default Value

false

Remarks

Specifies whether to replace invalid UTF8 characters with a '?'.

RetryOnS3Timeout

Whether or not to retry when network issues occur during chunk downloading.

Data Type

bool

Default Value

false

Remarks

Typically if a network issue such as a timeout occurs during chunk downloading of data, the CData JDBC Driver for Snowflake will throw an exception. Set this property to true to cause the CData JDBC Driver for Snowflake to attempt retrying the request before failing.

RTK

The runtime key used for licensing.

Data Type

string

Default Value

""

Remarks

The RTK property may be used to license a build. See the included licensing file to see how to set this property. The runtime key is only available if you purchased an OEM license.

SessionParameters

The session parameters for Snowflake. For example: SessionParameters='QUERY_TAG=MyTag;QUOTED_IDENTIFIERS_IGNORE_CASE=True;';.

Data Type

string

Default Value

""

Remarks

The session parameters for Snowflake. For example: SessionParameters='QUERY_TAG=MyTag;QUOTED_IDENTIFIERS_IGNORE_CASE=True;';
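
The quoted, semicolon-terminated value in the example above can be assembled from a map of parameter names and values. The following is a minimal sketch: the class SessionParametersSketch is hypothetical, and because this reference does not describe escaping rules, the sketch rejects names and values that contain a semicolon or a single quote rather than guessing at an escape syntax.

```java
import java.util.Map;

// Hypothetical helper: formats session parameters in the style of the example
// above, for instance 'QUERY_TAG=MyTag;QUOTED_IDENTIFIERS_IGNORE_CASE=True;'.
final class SessionParametersSketch {

    static String format(Map<String, String> parameters) {
        StringBuilder value = new StringBuilder("'");
        for (Map.Entry<String, String> entry : parameters.entrySet()) {
            requirePlain(entry.getKey());
            requirePlain(entry.getValue());
            value.append(entry.getKey()).append('=').append(entry.getValue()).append(';');
        }
        return value.append('\'').toString();
    }

    // Escaping is not documented here, so delimiter characters are refused.
    private static void requirePlain(String text) {
        if (text.indexOf(';') >= 0 || text.indexOf('\'') >= 0) {
            throw new IllegalArgumentException("unsupported character in: " + text);
        }
    }
}
```

Use a map with a stable iteration order, such as java.util.LinkedHashMap, so that the parameters appear in the order you added them.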

SupportEnhancedSQL

This property enhances SQL functionality beyond what can be supported through the API directly, by enabling in-memory client-side processing.

Data Type

bool

Default Value

false

Remarks

When SupportEnhancedSQL = true, the driver offloads as much of the SELECT statement processing as possible to Snowflake and then processes the rest of the query in memory. In this way, the driver can execute unsupported predicates, joins, and aggregation.

When SupportEnhancedSQL = false, the driver limits SQL execution to what is supported by the Snowflake API.

Execution of Predicates

The driver determines which of the clauses are supported by the data source and then pushes them to the source to get the smallest superset of rows that would satisfy the query. It then filters the rest of the rows locally. The filter operation is streamed, which enables the driver to filter effectively for even very large datasets.

Execution of Joins

The driver uses various techniques to join in memory. The driver trades off memory utilization against the requirement of reading the same table more than once.

Execution of Aggregates

The driver retrieves all rows necessary to process the aggregation in memory.
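
The split described under Execution of Predicates can be pictured with the following conceptual Java sketch. It is not the driver's implementation: the class EnhancedSqlSketch and its Clause type are hypothetical, and the first filter merely stands in for the work that would be pushed down to Snowflake. Supported clauses narrow the rows to a superset of the result, and the remaining clauses are applied locally as the rows stream through.

```java
import java.util.List;
import java.util.function.Predicate;
import java.util.stream.Stream;

// Conceptual illustration only: separates clauses the source can evaluate
// from clauses that must be evaluated on the client.
final class EnhancedSqlSketch {

    // A predicate plus a flag saying whether the (simulated) source supports it.
    static final class Clause<T> {
        final Predicate<T> test;
        final boolean supportedBySource;

        Clause(Predicate<T> test, boolean supportedBySource) {
            this.test = test;
            this.supportedBySource = supportedBySource;
        }
    }

    static <T> Stream<T> execute(Stream<T> sourceRows, List<Clause<T>> clauses) {
        Predicate<T> pushedDown = row -> true;
        Predicate<T> local = row -> true;
        for (Clause<T> clause : clauses) {
            if (clause.supportedBySource) {
                pushedDown = pushedDown.and(clause.test);
            } else {
                local = local.and(clause.test);
            }
        }
        // First filter: stands in for source-side evaluation (the superset of rows).
        // Second filter: client-side evaluation, applied lazily row by row.
        return sourceRows.filter(pushedDown).filter(local);
    }
}
```

Because Java streams are lazy, the local filter processes one row at a time and never holds the whole superset in memory, which mirrors the streamed filtering described above.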

Timeout

The value in seconds until the timeout error is thrown, canceling the operation.

Data Type